Cloud computing is changing everything about electronic design, according to Jeff Bier, founder of the Edge AI and Vision Alliance. That’s because more and more problems confronting designers are getting solved in the cloud.

As part of our regularly scheduled calls with EDN’s Editorial Advisory Board, we asked Bier what topics today’s electronics design engineers need more information on. Bier highlighted the cloud as the number one force driving change in engineering departments around the world. However, you could be forgiven for asking whether cloud computing has anything to do with electronic design at all.

“[The cloud] has everything to do with almost every aspect of electronic design,” Bier said, adamant that it is drastically changing the way engineers work.

Code generation

Bier noted that the traditional engineer’s sandbox, Matlab, introduced a feature more than a decade ago that generated code for the embedded target processor in one step. One underappreciated implication of that feature was that any choice of processor might then be influenced by which processors were supported by Matlab for code generation.

Previously, an embedded DSP engineer took the Matlab code from the algorithm engineer and re-coded the entire thing in assembly language (more likely to be C or C++ today). With Matlab’s code generation, this step could be cut out of the process, and time and money could be saved, but only by switching to a processor that was supported by Matlab.

Today, in the realm of AI and deep neural networks, the majority of algorithms are born in the cloud, using open-source frameworks like TensorFlow and PyTorch. They are then implemented on embedded processors in a variety of ways with a variety of tools.

“You can bet that going forward, a big factor in which are the preferred processors is going to be which processors have the easiest path from [the cloud to the embedded implementation],” Bier said. “Whose cloud has the embedded implementation button and which processors are supported? That’s the cloud that’s going to win. And that’s the embedded processor [that wins]… if it works, people are going to do that, because it’s a heck of a lot easier and faster than writing the code yourself.”

Bier highlights Xnor, the Seattle deep learning company acquired by Apple, which had this process licked.

“They fetched a nice price from Apple, because Apple understands the value of rapid time to market,” he said.

Bier sees many aspects of embedded software heading to the cloud. Many EDA tools are already cloud-based, for example.

“You’ll see similar things where I build my PCB design in the cloud, then who has the ‘fab me ten prototypes by tomorrow’ button?” Bier said.

While many have yet to appreciate the cloud’s significance to the electronic design process, this change is happening fast, partly thanks to the scale of today’s cloud companies.

Bier cites the FPGA players’ historical attempts to make FPGAs easier to program, a problem he said was finally solved by Microsoft and Amazon, which today offer FPGA acceleration of data-parallel code in the cloud.

“All you need is your credit card number… you press the FPGA accelerate button and it just works,” he said. “Microsoft and Amazon solved this problem because they had the scale and the homogeneous environment — the servers are all the same, it’s not like a million and one embedded systems, each slightly different. And they solved problems that [the FPGA players] never could. This is one of the reasons why the cloud is becoming this center of gravity for design and development activity.”

So, what can chip makers do to influence cloud makers to develop code generation functionality for their processors?

“Amazon, Google and Microsoft don’t care whose chip the customer uses, as long as they use their cloud. So [the chip maker is] the only one that cares about making sure that its chip is the one that’s easiest to target,” he said. “So I think they really need both – they really need to work with the big cloud players, but they also need to do their own thing.”

Bier notes that Intel already has its popular DevCloud, a cloud-based environment where developers can build and optimize code.

“It’s the next logical step, where all the tools and development boards are connected to Intel servers,” he said. “There’s no need to wait for anything to install or wait for any boxes to arrive.”

Edge vs. cloud

Another concept that today’s embedded developers really should be well-versed in, Bier said, is edge compute: any compute done outside the cloud, at the edge of the network. Since more embedded devices now have connectivity as part of the IoT, each system will have to strike a careful balance between what compute is done in the cloud and what is done at the edge for cost, speed or privacy reasons.

“Why do I care, if I’m an embedded systems person? Well, it matters a lot,” Bier said. “If the future is that embedded devices are just dumb data collectors that stream their data to the cloud, that’s frankly a lot less interesting and a lot less valuable than if the future is sophisticated, intelligent embedded devices running AI algorithms and sending findings up to the cloud, but not raw data.”

Bier’s example is a baby monitor company working on a smart camera that monitors a baby’s movements, breathing and heart rate. Should the intelligence go into the embedded device, or into the cloud?

“Placing the intelligence in the cloud means that if the home internet connection fails, the product doesn’t work,” Bier said. “But by launching the baby monitor [with intelligence in the cloud], they’re able to get the product to market a year faster, as it is then purely a dumb Wi-Fi camera… they didn’t have to build a purpose-built embedded system.”

By keeping intelligence in the cloud, the baby monitor company is also able to iterate its algorithm quickly and easily. Once a reasonable level of deployment is reached, it can do A/B testing overnight: deploy the new algorithm to half its customers, see which algorithm works better, and then deploy the winner to everyone. Deploying a new algorithm takes only a few key presses.
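The overnight A/B test described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the company’s actual method: the device IDs, the experiment name `algo-v2`, the helper names `assign_cohort` and `pick_winner`, and the idea of a single per-device quality score are all hypothetical. Hashing the device ID keeps each monitor in the same cohort from one night to the next, with no assignment table to store.

```python
import hashlib


def assign_cohort(device_id: str, experiment: str = "algo-v2",
                  treatment_share: float = 0.5) -> str:
    """Deterministically place a device in cohort "A" (current) or "B" (new).

    All names here are hypothetical. The hash of experiment + device ID is
    mapped to a number in [0, 1); devices below treatment_share get "B".
    """
    digest = hashlib.sha256(f"{experiment}:{device_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "B" if bucket < treatment_share else "A"


def pick_winner(scores_a: list[float], scores_b: list[float]) -> str:
    """Return the cohort with the higher mean score (assumes higher is better).

    A real rollout decision would also check statistical significance;
    this sketch compares plain averages only.
    """
    mean_a = sum(scores_a) / len(scores_a)
    mean_b = sum(scores_b) / len(scores_b)
    return "B" if mean_b > mean_a else "A"


if __name__ == "__main__":
    # Hypothetical fleet of 10,000 monitors, split roughly in half.
    devices = [f"monitor-{n:05d}" for n in range(10_000)]
    cohorts = [assign_cohort(d) for d in devices]
    print("share on new algorithm:", cohorts.count("B") / len(cohorts))
```

Because everything runs server-side, changing `treatment_share` from 0.5 to 1.0 is the whole “deploy to everyone” step; with intelligence at the edge, the same change would mean a firmware update on every device.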

“The point is, there are huge implications for what [intelligence] is in the endpoint device, and what’s in some kind of intermediate node, like a device that’s connected to your router or that’s on the operator’s pole down the street, or in the data center,” Bier said. “But this is outside the scope of what most embedded systems people think about today.”

The baby monitor company, following a successful cloud-based launch, is now shipping monitors in volume. Bier notes that the company has therefore become more cost-sensitive and no longer needs to iterate the algorithm as much, so it is looking into building a second-generation product that uses mostly edge processing.

Do you need a DNN?

Another rapidly growing field clearly changing the way embedded systems work is artificial intelligence.

Surveys carried out by the Edge AI and Vision Alliance reveal that deep neural networks (DNNs), the basis for much of today’s artificial intelligence, have gone from around 20% to around 80% adoption in embedded computer vision systems in the last five years.

“People are struggling with two things,” Bier said. “One is that actually getting them to work for their application is really hard. The other thing is figuring out where they should actually use deep neural networks.”

DNNs have become fashionable and everyone wants to use them, but they are not necessarily the best solution for many problems, Bier noted. For many, classical techniques are still a better fit.

“How would you recognize a problem that is suitable for solving with deep neural networks versus other classical techniques?” Bier said. “Embedded systems people, hardware and software people, really need to have a better grip on this. Because if you’re going to run deep neural networks, it has a big impact on your hardware — you need a tremendous amount of performance and memory compared to classical, hand engineered algorithms.”

The post How Cloud Computing Is Transforming Electronic Design appeared first on EE Times Asia.


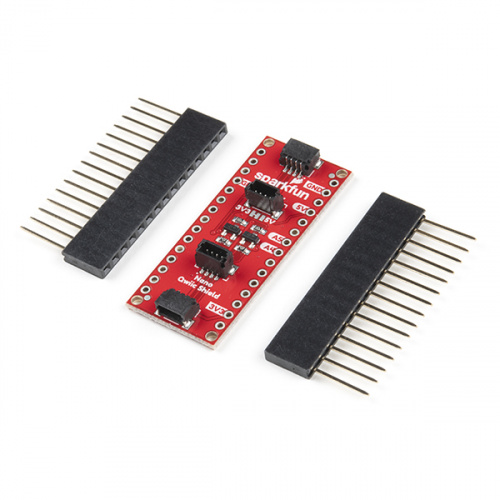

Traditional key-based locks are now outdated and come with limitations of their own. First, you always have to carry the key with you, and the key might get misplaced or stolen. Such locks are not completely secure either. These old locks are now being replaced by modern biometric ones. However, biometric locks use biometric sensors […]

Traditional key-based locks are now outdated and come with limitations of their own. First, you always have to carry the key with you, and the key might get misplaced or stolen. Such locks are not completely secure either. These old locks are now being replaced by modern biometric ones. However, biometric locks use biometric sensors […]

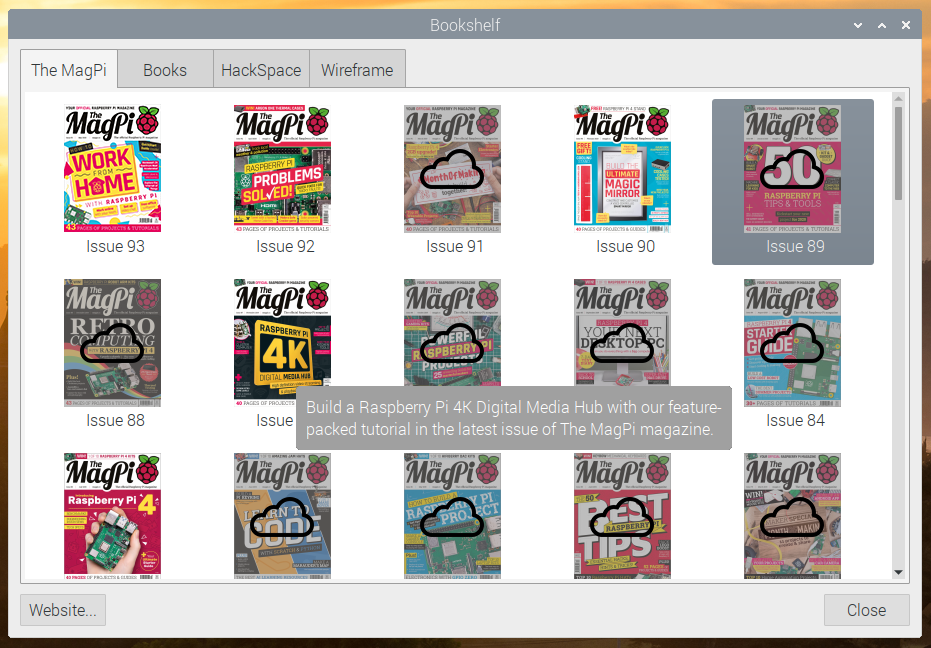

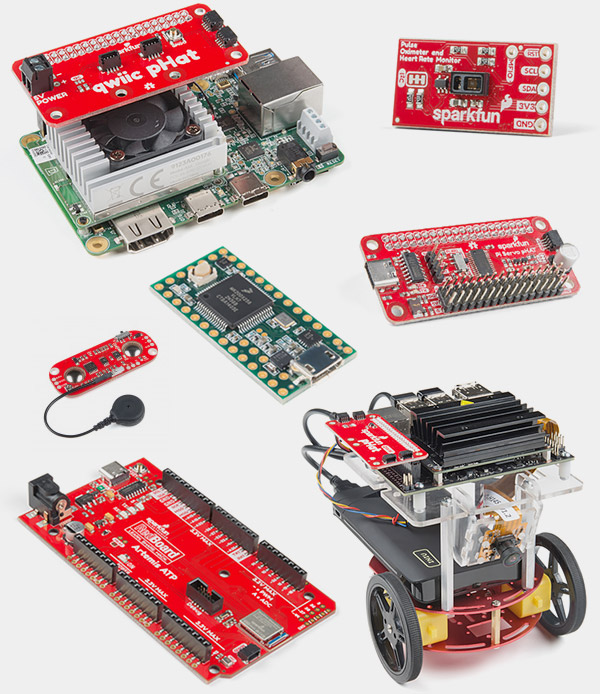

An increased memory capacity makes the board ideal for enhanced processing of data-intensive applications Retains all the essential features of the already available Raspberry Pi 4 boards Simply within a year since its launch, the Raspberry Pi 4 witnessed a huge jump in sales, thanks to the many enhancements it underwent such as reduced idle […]

An increased memory capacity makes the board ideal for enhanced processing of data-intensive applications Retains all the essential features of the already available Raspberry Pi 4 boards Simply within a year since its launch, the Raspberry Pi 4 witnessed a huge jump in sales, thanks to the many enhancements it underwent such as reduced idle […]

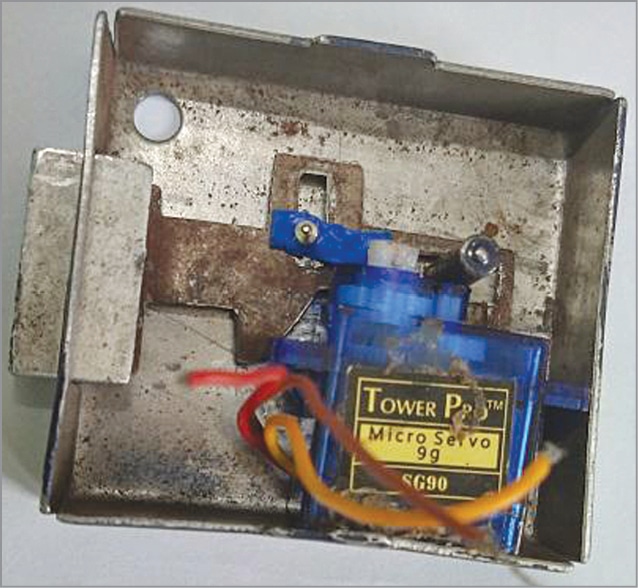

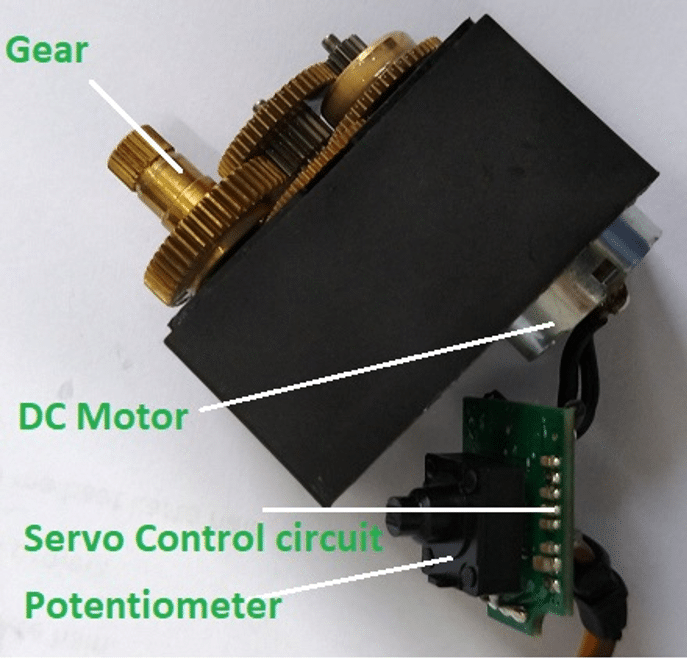

Often one gets to hear the word “Servo Motor” in electronics. You might have used it many times in your projects as well. But what is inside it and how does it work? Let’s find out. Servo motor is basically a type of motor that allows us to control the position, acceleration and velocity while […]

Often one gets to hear the word “Servo Motor” in electronics. You might have used it many times in your projects as well. But what is inside it and how does it work? Let’s find out. Servo motor is basically a type of motor that allows us to control the position, acceleration and velocity while […] There is no accepted or standard definition of good artificial intelligence (AI). However, good AI is one that can guide users understand various options, explain tradeoffs among multiple possible choices and then help make those decisions. Good AI will always honour the final decision made by humans. It is a common phenomenon that if you […]

There is no accepted or standard definition of good artificial intelligence (AI). However, good AI is one that can guide users understand various options, explain tradeoffs among multiple possible choices and then help make those decisions. Good AI will always honour the final decision made by humans. It is a common phenomenon that if you […] Provides industrial operators flexibility to work quickly and remotely without extensive re-wiring Optimal for adapting to the changes brought about by Industry 4.0 Traditional control systems require costly and labour-intensive manual configuration, with a complex array of channel modules, analogue and digital signal converters and individually wired inputs/outputs to communicate with the machines, instruments and […]

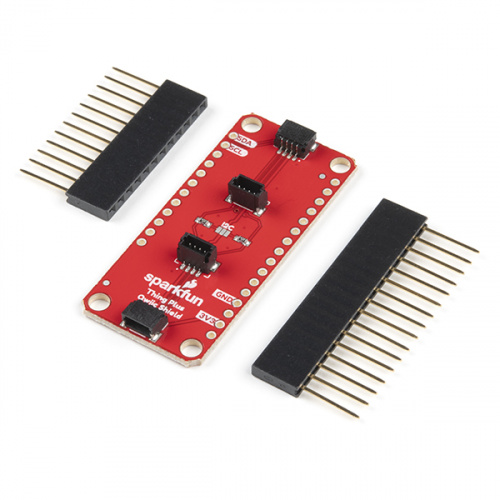

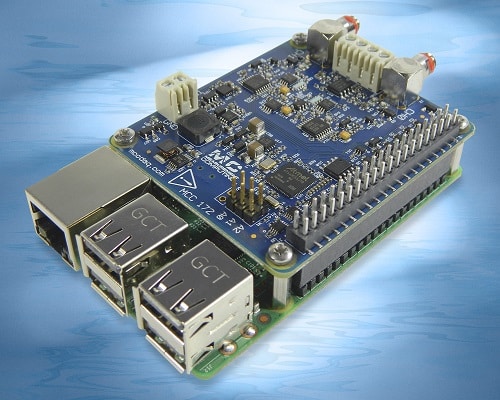

Provides industrial operators flexibility to work quickly and remotely without extensive re-wiring Optimal for adapting to the changes brought about by Industry 4.0 Traditional control systems require costly and labour-intensive manual configuration, with a complex array of channel modules, analogue and digital signal converters and individually wired inputs/outputs to communicate with the machines, instruments and […] Ideal for Machine Condition Monitoring and Edge Computing applications Is an open-source solution that allows users to develop applications on Linux Measurement Computing Corporation, a designer and manufacturer of data acquisition devices has announced the release of the MCC 172 Integrated Electronic Piezoelectric (IEPE) Measurement Hardware Attached on Top (HAT) for Raspberry Pi. Ideal for […]

Ideal for Machine Condition Monitoring and Edge Computing applications Is an open-source solution that allows users to develop applications on Linux Measurement Computing Corporation, a designer and manufacturer of data acquisition devices has announced the release of the MCC 172 Integrated Electronic Piezoelectric (IEPE) Measurement Hardware Attached on Top (HAT) for Raspberry Pi. Ideal for […]

Delivers rapid and convenient car-key connectivity over extended distances Compliant with the Digital Key Release 2.0 standard by the Car Connectivity Consortium and certified by NFC Forum STMicroelectronics has announced a new addition to its digital car key portfolio of ST25R near-field communication (NFC) reader ICs, the ST25R3920. The new device introduces enhanced features for better […]

Delivers rapid and convenient car-key connectivity over extended distances Compliant with the Digital Key Release 2.0 standard by the Car Connectivity Consortium and certified by NFC Forum STMicroelectronics has announced a new addition to its digital car key portfolio of ST25R near-field communication (NFC) reader ICs, the ST25R3920. The new device introduces enhanced features for better […]